Sunday, January 10, 2016

Probability (P)

It is necessary to look at the cause of a failure mode and its likelihood of occurrence. This can be done by analysis, by calculation (for example, FEM), or by looking at similar items or processes and the failure modes that have been documented for them in the past. A failure cause is regarded as a design weakness. All potential causes of a failure mode should be identified and documented in technical terms. Examples of causes are: human errors in handling, manufacturing-induced faults, fatigue, creep, abrasive wear, erroneous algorithms, excessive voltage, or improper operating conditions or use (depending on the ground rules used). Each failure mode is given a Probability Ranking.

| Rating | Meaning |
|---|---|
| A | Extremely Unlikely (virtually impossible, or no known occurrences on similar products or processes with many running hours) |
| B | Remote (relatively few failures) |
| C | Occasional (occasional failures) |
| D | Reasonably Possible (repeated failures) |
| E | Frequent (failure is almost inevitable) |

Severity (S)

Determine the Severity for the worst-case adverse end effect (state). It is convenient to write these effects down in terms of what the user might see or experience as functional failures. Examples of these end effects are: full loss of function x, degraded performance, functioning in reversed mode, delayed functioning, erratic functioning, etc. Each end effect is given a Severity number (S) from, say, I (no effect) to VI (catastrophic), based on cost and/or loss of life or quality of life. These numbers, together with probability and detectability, prioritize the failure modes. A typical classification is given below; other classifications are possible. See also hazard analysis.

| Rating | Meaning |
|---|---|
| I | No relevant effect on reliability or safety |
| II | Very minor, no damage, no injuries, only results in a maintenance action (only noticed by discriminating customers) |
| III | Minor, low damage, light injuries (affects very little of the system, noticed by average customer) |
| IV | Moderate, moderate damage, injuries possible (most customers are annoyed, mostly financial damage) |
| V | Critical (causes a loss of primary function; loss of all safety margins, one failure away from a catastrophe, severe damage, severe injuries, at most one possible death) |
| VI | Catastrophic (product becomes inoperative; the failure may result in completely unsafe operation and possible multiple deaths) |

Detection (D)

Detection describes the means or method by which a failure is detected and isolated by the operator and/or maintainer, and the time this may take. This is important for maintainability control (availability of the system) and is especially important for multiple-failure scenarios. It may involve dormant failure modes (e.g. no direct system effect because a redundant system or item automatically takes over, or because the failure is only problematic during specific mission or system states) or latent failures (e.g. deterioration failure mechanisms, such as a crack growing in metal that has not yet reached a critical length). It should be made clear how the failure mode or cause can be discovered by an operator under normal system operation, or whether it can be discovered by the maintenance crew through some diagnostic action or automatic built-in system test. A dormancy and/or latency period may be entered.

| Rating | Meaning |
|---|---|
| 1 | Certain - fault will be caught on test |
| 2 | Almost certain |
| 3 | High |
| 4 | Moderate |
| 5 | Low |
| 6 | Fault is undetected by Operators or Maintainers |

Dormancy or Latency Period

The average time that a failure mode may remain undetected can be entered, if known. For example:

- Seconds, auto detected by maintenance computer

- 8 hours, detected by turn-around inspection

- 2 months, detected by scheduled maintenance block X

- 2 years, detected by overhaul task x

Indication

If the undetected failure allows the system to remain in a safe / working state, a second failure situation should be explored to determine whether or not an indication will be evident to all operators and what corrective action they may or should take.

Indications to the operator should be described as follows:

- Normal. An indication that is evident to an operator when the system or equipment is operating normally.

- Abnormal. An indication that is evident to an operator when the system has malfunctioned or failed.

- Incorrect. An erroneous indication to an operator due to the malfunction or failure of an indicator (i.e., instruments, sensing devices, visual or audible warning devices, etc.).

After these three basic steps the Risk level may be provided.

Risk level (P*S) and (D)

Risk is the combination of end-effect probability and severity, where both include the effect of non-detectability (dormancy time). Non-detectability may influence the end-effect probability of failure or the worst-case effect severity. The exact calculation may not be easy in all cases, such as those where multiple scenarios (with multiple events) are possible and detectability / dormancy plays a crucial role (as for redundant systems). In that case Fault Tree Analysis and/or Event Trees may be needed to determine exact probability and risk levels.

Preliminary Risk levels can be selected from a Risk Matrix such as the one shown below, based on MIL-STD-882.[24] The higher the Risk level, the more justification and mitigation is needed to provide evidence and lower the risk to an acceptable level. High risk should be reported to higher-level management, who are responsible for final decision-making.

| Probability ↓ / Severity → | I | II | III | IV | V | VI |
|---|---|---|---|---|---|---|
| A | Low | Low | Low | Low | Moderate | High |
| B | Low | Low | Low | Moderate | High | Unacceptable |
| C | Low | Low | Moderate | Moderate | High | Unacceptable |
| D | Low | Moderate | Moderate | High | Unacceptable | Unacceptable |
| E | Moderate | Moderate | High | Unacceptable | Unacceptable | Unacceptable |
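As an illustration, the matrix lookup can be sketched in a few lines of Python. This is only a minimal sketch: the matrix values are copied from the table above, while the names `RISK_MATRIX`, `SEVERITY_RATINGS` and `risk_level` are hypothetical and chosen here for the example.

```python
# Preliminary risk lookup. The values are copied from the risk matrix above;
# the names used here are illustrative, not part of any standard.
SEVERITY_RATINGS = ["I", "II", "III", "IV", "V", "VI"]

RISK_MATRIX = {
    "A": ["Low", "Low", "Low", "Low", "Moderate", "High"],
    "B": ["Low", "Low", "Low", "Moderate", "High", "Unacceptable"],
    "C": ["Low", "Low", "Moderate", "Moderate", "High", "Unacceptable"],
    "D": ["Low", "Moderate", "Moderate", "High", "Unacceptable", "Unacceptable"],
    "E": ["Moderate", "Moderate", "High", "Unacceptable", "Unacceptable", "Unacceptable"],
}


def risk_level(probability: str, severity: str) -> str:
    """Return the preliminary risk level for a probability (A-E) and severity (I-VI)."""
    if probability not in RISK_MATRIX:
        raise ValueError(f"unknown probability rating: {probability!r}")
    if severity not in SEVERITY_RATINGS:
        raise ValueError(f"unknown severity rating: {severity!r}")
    column = SEVERITY_RATINGS.index(severity)
    return RISK_MATRIX[probability][column]


print(risk_level("C", "IV"))  # Moderate
print(risk_level("B", "VI"))  # Unacceptable
```

A lookup like this only gives the preliminary level; detectability and dormancy still have to be considered separately, as described above.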

The FMEA should be updated whenever:

- A new cycle begins (new product/process)

- Changes are made to the operating conditions

- A change is made in the design

- New regulations are instituted

- Customer feedback indicates a problem

Uses

- Development of system requirements that minimize the likelihood of failures.

- Development of designs and test systems to ensure that the failures have been eliminated or the risk is reduced to an acceptable level.

- Development and evaluation of diagnostic systems

- To help with design choices (trade-off analysis).

Advantages

- Improve the quality, reliability and safety of a product/process

- Improve company image and competitiveness

- Increase user satisfaction

- Reduce system development time and cost

- Collect information to reduce future failures, capture engineering knowledge

- Reduce the potential for warranty concerns

- Early identification and elimination of potential failure modes

- Emphasize problem prevention

- Minimize late changes and associated cost

- Catalyst for teamwork and idea exchange between functions

- Reduce the possibility of the same kind of failure in the future

- Reduce impact on company profit margin

- Improve production yield

- Maximize profit

References

- System Reliability Theory: Models, Statistical Methods, and Applications, Marvin Rausand & Arnljot Høyland, Wiley Series in Probability and Statistics, second edition 2004, page 88

- Project Reliability Group (July 1990). Koch, John E., ed. Jet Propulsion Laboratory Reliability Analysis Handbook (pdf). Pasadena, California: Jet Propulsion Laboratory. JPL-D-5703. Retrieved 2013-08-25.

- Goddard Space Flight Center (GSFC) (1996-08-10). Performing a Failure Mode and Effects Analysis (pdf). Goddard Space Flight Center. 431-REF-000370. Retrieved 2013-08-25.

- Langford, J. W. (1995). Logistics: Principles and Applications. McGraw Hill. p. 488.

- United States Department of Defense (9 November 1949). MIL-P-1629 - Procedures for performing a failure mode effect and critical analysis. Department of Defense (US). MIL-P-1629.

- United States Department of Defense (24 November 1980). MIL-STD-1629A - Procedures for performing a failure mode effect and criticality analysis. Department of Defense (USA). MIL-STD-1629A.

- Neal, R.A. (1962). Modes of Failure Analysis Summary for the Nerva B-2 Reactor (PDF). Westinghouse Electric Corporation Astronuclear Laboratory. WANL–TNR–042. Retrieved 2010-03-13.

- Dill, Robert; et al. (1963). State of the Art Reliability Estimate of Saturn V Propulsion Systems (PDF). General Electric Company. RM 63TMP–22. Retrieved 2010-03-13.

- Procedure for Failure Mode, Effects and Criticality Analysis (FMECA) (PDF). National Aeronautics and Space Administration. 1966. RA–006–013–1A. Retrieved 2010-03-13.

- Failure Modes, Effects, and Criticality Analysis (FMECA) (PDF). National Aeronautics and Space Administration JPL. PD–AD–1307. Retrieved 2010-03-13.

- Experimenters' Reference Based Upon Skylab Experiment Management (PDF). National Aeronautics and Space Administration George C. Marshall Space Flight Center. 1974. M–GA–75–1. Retrieved 2011-08-16.

- Design Analysis Procedure For Failure Modes, Effects and Criticality Analysis (FMECA). Society of Automotive Engineers. 1967. ARP926.

- Dyer, Morris K.; Dewey G. Little; Earl G. Hoard; Alfred C. Taylor; Rayford Campbell (1972). Applicability of NASA Contract Quality Management and Failure Mode Effect Analysis Procedures to the USFS Outer Continental Shelf Oil and Gas Lease Management Program (PDF). National Aeronautics and Space Administration George C. Marshall Space Flight Center. TM X–2567. Retrieved 2011-08-16.

Reliability Engineering

Reliability engineering is engineering that emphasizes dependability in the lifecycle management of a product. Dependability, or reliability, describes the ability of a system or component to function under stated conditions for a specified period of time. Reliability may also describe the ability to function at a specified moment or interval of time (availability). Reliability engineering is a sub-discipline of systems engineering. Reliability is theoretically defined as the probability of success (Reliability = 1 - Probability of Failure), as the frequency of failures, or, in terms of availability, as a probability derived from reliability, testability and maintainability. Testability, maintainability and maintenance are often defined as part of "reliability engineering" in Reliability Programs. Reliability plays a key role in the cost-effectiveness of systems.

Reliability engineering relates closely to safety engineering and to system safety, in that they use common methods for their analysis and may require input from each other. Reliability engineering focuses on costs of failure caused by system downtime, cost of spares, repair equipment, personnel, and cost of warranty claims. Safety engineering normally emphasizes not cost, but preserving life and nature, and therefore deals only with particular dangerous system-failure modes. High reliability (safety factor) levels also result from good engineering and from attention to detail, and almost never from only reactive failure management (reliability accounting / statistics).

A former United States Secretary of Defense, economist James R. Schlesinger, once stated: "Reliability is, after all, engineering in its most practical form."

History

The word reliability can be traced back to 1816, to the poet Coleridge. Before World War II the term was linked mostly to repeatability: a test (in any type of science) was considered reliable if the same results were obtained repeatedly. In the 1920s, product improvement through the use of statistical process control was promoted by Dr. Walter A. Shewhart at Bell Labs. Around this time Waloddi Weibull was working on statistical models for fatigue. The development of reliability engineering was here on a parallel path with quality. The modern use of the word reliability was defined by the U.S. military in the 1940s and has evolved to the present. It initially came to mean that a product would operate when expected (nowadays called "mission readiness") and for a specified period of time. Around World War II and later, many reliability issues were due to the inherent unreliability of electronics and to fatigue. In 1945, M.A. Miner published the seminal paper titled “Cumulative Damage in Fatigue” in an ASME journal. A main application of reliability engineering in the military was the vacuum tube as used in radar systems and other electronics, for which reliability proved to be very problematic and costly. The IEEE formed the Reliability Society in 1948. In 1950, on the military side, a group called the Advisory Group on the Reliability of Electronic Equipment (AGREE) was formed. This group recommended the following three main ways of working:

- Improve component reliability

- Establish quality and reliability requirements (also) for suppliers

- Collect field data and find root causes of failures

In the 1960s more emphasis was given to reliability testing at component and system level. The famous military standard 781 was created at that time. Around this period the much-used (and much-debated) military handbook 217 was also published by RCA (Radio Corporation of America) and was used for the prediction of failure rates of components. The emphasis on component reliability and empirical research (e.g. MIL-HDBK-217) alone slowly decreased, and more pragmatic approaches, as used in the consumer industries, came into use.

The 1980s was a decade of great changes. Televisions had become all semiconductor. Automobiles rapidly increased their use of semiconductors, with a variety of microcomputers under the hood and in the dash. Large air conditioning systems developed electronic controllers, as had microwave ovens and a variety of other appliances. Communications systems began to adopt electronics to replace older mechanical switching systems. Bellcore issued the first consumer prediction methodology for telecommunications, and SAE developed a similar document, SAE870050, for automotive applications. The nature of predictions evolved during the decade, and it became apparent that die complexity was not the only factor that determined failure rates for integrated circuits (ICs). Kam Wong published a paper questioning the bathtub curve. During this decade, the failure rate of many components dropped by a factor of 10. Software became important to the reliability of systems.

By the 1990s, the pace of IC development was picking up. Wider use of stand-alone microcomputers was common, and the PC market helped keep IC densities following Moore’s Law and doubling about every 18 months. Reliability engineering was now shifting towards understanding the physics of failure. Failure rates for components kept dropping, but system-level issues became more prominent. Systems thinking became more and more important. For software, the CMM (Capability Maturity Model) was developed, which gave a more qualitative approach to reliability. ISO 9000 added reliability measures as part of the design and development portion of certification.

The expansion of the World-Wide Web created new challenges of security and trust. The older problem of too little reliability information being available had now been replaced by too much information of questionable value. Consumer reliability problems could now be backed by data and discussed online in real time. New technologies such as micro-electromechanical systems (MEMS), handheld GPS, and hand-held devices that combined cell phones and computers all represent challenges to maintaining reliability. Product development time continued to shorten through this decade, and what had been done in three years was being done in 18 months. This meant that reliability tools and tasks had to be more closely tied to the development process itself. In many ways, reliability became part of everyday life and consumer expectations.

Fault Tree Analysis

Fault tree analysis (FTA) is a top-down, deductive failure analysis in which an undesired state of a system is analyzed using Boolean logic to combine a series of lower-level events. This analysis method is mainly used in safety engineering and reliability engineering to understand how systems can fail, to identify the best ways to reduce risk, or to determine (or get a feeling for) event rates of a safety accident or a particular system-level (functional) failure. FTA is used in the aerospace, nuclear power, chemical and process, pharmaceutical, petrochemical and other high-hazard industries, but is also used in fields as diverse as risk factor identification relating to social service system failure. FTA is also used in software engineering for debugging purposes and is closely related to the cause-elimination technique used to detect bugs.

In aerospace, the more general term "system Failure Condition" is used for the "undesired state" / Top event of the fault tree. These conditions are classified by the severity of their effects. The most severe conditions require the most extensive fault tree analysis. These "system Failure Conditions" and their classification are often previously determined in the functional Hazard analysis.

Usage

Fault Tree Analysis can be used to:

- understand the logic leading to the top event / undesired state.

- show compliance with the (input) system safety / reliability requirements.

- prioritize the contributors leading to the top event - Creating the Critical Equipment/Parts/Events lists for different importance measures.

- monitor and control the safety performance of the complex system (e.g., is a particular aircraft safe to fly when fuel valve x malfunctions? For how long is it allowed to fly with the valve malfunction?).

- minimize and optimize resources.

- assist in designing a system. The FTA can be used as a design tool that helps to create (output / lower level) requirements.

- function as a diagnostic tool to identify and correct causes of the top event. It can help with the creation of diagnostic manuals / processes.

Graphic Symbols

The basic symbols used in FTA are grouped as events, gates, and transfer symbols. Minor variations may be used in FTA software.

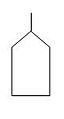

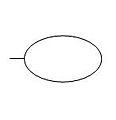

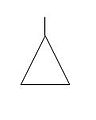

Event Symbols

Event symbols are used for primary events and intermediate events. Primary events are not further developed on the fault tree. Intermediate events are found at the output of a gate. The event symbols are shown below:

The primary event symbols are typically used as follows:

- Basic event - failure or error in a system component or element (example: switch stuck in open position)

- External event - normally expected to occur (not of itself a fault)

- Undeveloped event - an event about which insufficient information is available, or which is of no consequence

- Conditioning event - conditions that restrict or affect logic gates (example: mode of operation in effect)

An intermediate event gate can be used immediately above a primary event to provide more room to type the event description. FTA is a top-down approach.

Gate Symbols

Gate symbols describe the relationship between input and output events. The symbols are derived from Boolean logic symbols:

The gates work as follows:

- OR gate - the output occurs if any input occurs

- AND gate - the output occurs only if all inputs occur (inputs are independent)

- Exclusive OR gate - the output occurs if exactly one input occurs

- Priority AND gate - the output occurs if the inputs occur in a specific sequence specified by a conditioning event

- Inhibit gate - the output occurs if the input occurs under an enabling condition specified by a conditioning event
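The gate logic above can be sketched as plain Boolean functions in Python. This is a minimal illustration only: the function names and the small example tree (two redundant pumps and a control power supply) are hypothetical, and the Priority AND gate is modelled here by comparing occurrence times, which is one possible reading of "a specific sequence".

```python
from typing import Optional, Sequence


def or_gate(inputs: Sequence[bool]) -> bool:
    """Output occurs if any input occurs."""
    return any(inputs)


def and_gate(inputs: Sequence[bool]) -> bool:
    """Output occurs only if all inputs occur."""
    return all(inputs)


def exclusive_or_gate(inputs: Sequence[bool]) -> bool:
    """Output occurs if exactly one input occurs."""
    return sum(1 for value in inputs if value) == 1


def priority_and_gate(occurrence_times: Sequence[Optional[float]]) -> bool:
    """Output occurs if all inputs occurred, in the listed order.

    Each entry is the time at which that input occurred, or None if it
    did not occur (an assumption made for this sketch).
    """
    if any(t is None for t in occurrence_times):
        return False
    return all(a < b for a, b in zip(occurrence_times, occurrence_times[1:]))


def inhibit_gate(input_event: bool, enabling_condition: bool) -> bool:
    """Output occurs if the input occurs while the enabling condition holds."""
    return input_event and enabling_condition


def loss_of_cooling(pump_a_failed: bool, pump_b_failed: bool,
                    control_power_lost: bool) -> bool:
    """Hypothetical top event: both redundant pumps fail OR control power is lost."""
    both_pumps_failed = and_gate([pump_a_failed, pump_b_failed])
    return or_gate([both_pumps_failed, control_power_lost])


print(loss_of_cooling(True, False, False))  # False: one pump still runs
print(loss_of_cooling(True, True, False))   # True: both pumps failed
```

Evaluating the tree for each combination of basic events in this way shows which combinations lead to the top event.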

Transfer Symbols

Transfer symbols are used to connect the inputs and outputs of related fault trees, such as the fault tree of a subsystem to that of its system. NASA has prepared a complete document on FTA that works through practical incidents.

Failure

Failure causes are defects in design, process, quality, or part application, which are the underlying cause of a failure or which initiate a process which leads to failure. Where failure depends on the user of the product or process, then human error must be considered.

Component Failure

Failure Scenario

A scenario is the complete identified possible sequence and combination of events, failures (failure modes), conditions and system states leading to an end (failure) system state. It starts from causes (if known) and leads to one particular end effect (the system failure condition). A failure scenario is to a system what a failure mechanism is to a component. Both result in a failure mode (state) of the system / component.

The term is part of the engineering lexicon, especially of engineers working to test and debug products or processes. Carefully observing and describing failure conditions, identifying whether failures are reproducible or transient, and hypothesizing what combination of conditions and sequence of events led to failure is part of the process of fixing design flaws or improving future iterations. The term may be applied to mechanical systems failure.

Mechanical Failure

Some types of mechanical failure mechanisms are: excessive deflection, buckling, ductile fracture, brittle fracture, impact, creep, relaxation, thermal shock, wear, corrosion, stress corrosion cracking, and various types of fatigue. Each produces a different type of fracture surface and other indicators near the fracture surface(s). The way the product is loaded and the loading history are also important factors that determine the outcome. Design geometry is of critical importance, because stress concentrations can magnify the applied load locally to very high levels, and cracks usually grow from these locations.

Over time, as more is understood about a failure, the failure cause evolves from a description of symptoms and outcomes (that is, effects) to a systematic and relatively abstract model of how, when, and why the failure comes about (that is, causes).

The more complex the product or situation, the more necessary a good understanding of its failure cause is to ensuring its proper operation (or repair). Cascading failures, for example, are particularly complex failure causes. Edge cases and corner cases are situations in which complex, unexpected, and difficult-to-debug problems often occur.

Basic Terms

The following covers some basic FMEA terminology.

- Failure

- The loss of a function under stated conditions.

- Failure mode

- The specific manner or way in which a failure occurs, in terms of failure of the function of the item under investigation (a part or a (sub)system); it may generally describe the way the failure occurs. It shall at least clearly describe an (end) failure state of the item (or function, in the case of a Functional FMEA) under consideration. It is the result of the failure mechanism (cause of the failure mode). For example, a fully fractured axle, a deformed axle, or a fully open or fully closed electrical contact are each a separate failure mode.

- Failure cause and/or mechanism

- Defects in requirements, design, process, quality control, handling or part application, which are the underlying cause or sequence of causes that initiate a process (mechanism) that leads to a failure mode over a certain time. A failure mode may have multiple causes. For example, "fatigue or corrosion of a structural beam" or "fretting corrosion in an electrical contact" is a failure mechanism and in itself (likely) not a failure mode. The related failure mode (end state) is a "full fracture of structural beam" or "an open electrical contact". The initial cause might have been "improper application of corrosion protection layer (paint)" and/or "(abnormal) vibration input from another (possibly failed) system".

- Failure effect

- Immediate consequences of a failure on operation, function or functionality, or status of some item.

- Indenture levels (bill of material or functional breakdown)

- An identifier for system level and thereby item complexity. Complexity increases as levels are closer to one.

- Local effect

- The failure effect as it applies to the item under analysis.

- Next higher level effect

- The failure effect as it applies at the next higher indenture level.

- End effect

- The failure effect at the highest indenture level or total system.

- Detection

- The means of detection of the failure mode by maintainer, operator or built in detection system, including estimated dormancy period (if applicable)

- Risk Priority Number (RPN)

- Severity (of the event) * Probability (of the event occurring) * Detection (Probability that the event would not be detected before the user was aware of it)

- Severity

- The consequences of a failure mode. Severity considers the worst potential consequence of a failure, determined by the degree of injury, property damage, system damage and/or time lost to repair the failure.

- Remarks / mitigation / actions

- Additional info, including the proposed mitigation or actions used to lower a risk or justify a risk level or scenario.
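To make the terminology concrete, the sketch below shows how one FMEA worksheet row and its Risk Priority Number might be represented in Python. It is only an illustration under stated assumptions: the class `FmeaRecord`, its field names and the example axle entry are hypothetical, and the mapping of the letter and Roman-numeral ratings to numbers (A-E to 1-5, I-VI to 1-6) is an assumption made here so that the RPN product can be computed.

```python
from dataclasses import dataclass

# Assumed numeric ranks for the ratings used in this post (not from a standard).
PROBABILITY_RANK = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}
SEVERITY_RANK = {"I": 1, "II": 2, "III": 3, "IV": 4, "V": 5, "VI": 6}


@dataclass
class FmeaRecord:
    """One row of a hypothetical FMEA worksheet, using the terms defined above."""
    item: str
    failure_mode: str
    failure_cause: str
    local_effect: str
    next_higher_level_effect: str
    end_effect: str
    probability: str  # "A" .. "E"
    severity: str     # "I" .. "VI"
    detection: int    # 1 (certain to be detected) .. 6 (undetected)
    remarks: str = ""

    def rpn(self) -> int:
        """Risk Priority Number = Severity * Probability * Detection."""
        if not 1 <= self.detection <= 6:
            raise ValueError("detection rating must be between 1 and 6")
        return (SEVERITY_RANK[self.severity]
                * PROBABILITY_RANK[self.probability]
                * self.detection)


record = FmeaRecord(
    item="Drive axle",
    failure_mode="Fully fractured axle",
    failure_cause="Fatigue",
    local_effect="No torque transfer",
    next_higher_level_effect="Loss of drive",
    end_effect="Vehicle cannot move",
    probability="B",
    severity="V",
    detection=4,
)
print(record.rpn())  # 5 * 2 * 4 = 40
```

Sorting a list of such records by `rpn()` in descending order gives one simple way to rank failure modes, although the risk matrix shown earlier in the post remains the basis for the preliminary risk level.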

Introduction

The FME(C)A is a design tool used to systematically analyze postulated component failures and identify the resultant effects on system operations. The analysis is sometimes characterized as consisting of two sub-analyses, the first being the failure modes and effects analysis (FMEA), and the second, the criticality analysis (CA). Successful development of an FMEA requires that the analyst include all significant failure modes for each contributing element or part in the system. FMEAs can be performed at the system, subsystem, assembly, subassembly or part level. The FMECA should be a living document during development of a hardware design. It should be scheduled and completed concurrently with the design. If completed in a timely manner, the FMECA can help guide design decisions. The usefulness of the FMECA as a design tool and in the decision-making process is dependent on the effectiveness and timeliness with which design problems are identified. Timeliness is probably the most important consideration. In the extreme case, the FMECA would be of little value to the design decision process if the analysis is performed after the hardware is built. While the FMECA identifies all part failure modes, its primary benefit is the early identification of all critical and catastrophic subsystem or system failure modes so they can be eliminated or minimized through design modification at the earliest point in the development effort; therefore, the FMECA should be performed at the system level as soon as preliminary design information is available and extended to the lower levels as the detail design progresses.

Remark: For more complete scenario modelling, another type of reliability analysis may be considered, for example fault tree analysis (FTA): a deductive (backward logic) failure analysis that can handle multiple failures within the item and/or external to the item, including maintenance and logistics. It starts at a higher functional / system level. An FTA may use the basic failure mode FMEA records or an effect summary as one of its inputs (the basic events). Interface hazard analysis, human error analysis and others may be added to complete the scenario modelling.

Functional analysis

The analysis may be performed at the functional level until the design has matured sufficiently to identify specific hardware that will perform the functions; then the analysis should be extended to the hardware level. When performing the hardware level FMECA, interfacing hardware is considered to be operating within specification. In addition, each part failure postulated is considered to be the only failure in the system (i.e., it is a single failure analysis). In addition to the FMEAs done on systems to evaluate the impact lower level failures have on system operation, several other FMEAs are done. Special attention is paid to interfaces between systems and in fact at all functional interfaces. The purpose of these FMEAs is to assure that irreversible physical and/or functional damage is not propagated across the interface as a result of failures in one of the interfacing units. These analyses are done to the piece part level for the circuits that directly interface with the other units. The FMEA can be accomplished without a CA, but a CA requires that the FMEA has previously identified system level critical failures. When both steps are done, the total process is called a FMECA.

Ground rules

The ground rules of each FMEA include a set of project selected procedures; the assumptions on which the analysis is based; the hardware that has been included and excluded from the analysis and the rationale for the exclusions. The ground rules also describe the indenture level of the analysis, the basic hardware status, and the criteria for system and mission success. Every effort should be made to define all ground rules before the FMEA begins; however, the ground rules may be expanded and clarified as the analysis proceeds. A typical set of ground rules (assumptions) follows:

1. Only one failure mode exists at a time.

2. All inputs (including software commands) to the item being analyzed are present and at nominal values.

3. All consumables are present in sufficient quantities.

4. Nominal power is available.

Benefits

Major benefits derived from a properly implemented FMECA effort are as follows:

1. A documented method for selecting a design with a high probability of successful operation and safety.

2. A documented uniform method of assessing potential failure mechanisms, failure modes and their impact on system operation, resulting in a list of failure modes ranked according to the seriousness of their system impact and likelihood of occurrence.

3. Early identification of single failure points (SFPs) and system interface problems, which may be critical to mission success and/or safety. It also provides a method of verifying that switching between redundant elements is not jeopardized by postulated single failures.

4. An effective method for evaluating the effect of proposed changes to the design and/or operational procedures on mission success and safety.

5. A basis for in-flight troubleshooting procedures and for locating performance monitoring and fault-detection devices.

6. Criteria for early planning of tests.

Of the items above, early identification of SFPs, input to the troubleshooting procedure, and the locating of performance monitoring / fault-detection devices are probably the most important benefits of the FMECA. In addition, the FMECA procedures are straightforward and allow orderly evaluation of the design.
